[av_textblock size=” font_color=” color=”]

SEO Tactic #4: Boost Rankings by Consolidating Duplicate Content

This is the fourth part of a 15-part series. The previous tip was about optimizing your meta descriptions to boost click-through rates. In this tactic, we’ll help you increase your organic traffic by dealing with duplicate content.

But what is duplicate content?

There are two kinds of duplicate content, broadly speaking, but only one that’s relevant to ecommerce. We won’t be talking about intentionally plagiarized content today, because even though that’s disastrous for your reputation, the odds that any respectable ecommerce site is a party to plagiarism are basically nil.

The other type of duplicate content doesn’t incur the ever-dreaded Google penalties, but it still doesn’t do you any favors. If your website has two or more different URLs that have the same page content, that’s duplicate content. Here are some common examples:

- www.example.com/product.html and www.example.com/category/product.html

- example.com/product.html and www.example.com/product.html

- HTTP and HTTPS versions

- Faceted navigation (especially when it results in endless combinations of parameters)

- Different URLs for different color/size combinations

- Session identifiers

A small amount of duplicate content isn’t a terrible thing. But sometimes, especially on ecommerce sites, the problem is not small at all. For some sites, it’s very, very large. When this happens, it becomes a major drain on your organic traffic. Why is duplicate content such a big problem? Two main reasons:

- Some of your pages may not be indexed. Google allocates a limited “crawl budget” to your site. Duplicate pages waste Googlebot’s time, decreasing the likelihood that it will visit all of your pages. Some of your pages will not be indexed, and therefore, will not appear in search results at all. Ecommerce sites are particularly at risk for this because of the large number of pages that they typically have.

- Page reputation is diluted. One of the biggest ranking factors is the number of external links to the page, so I’ll use that as an example. The reputation those links pass along is sometimes referred to as link equity. When you have multiple copies of the same page, each copy may be receiving links. Let’s say you have three copies with ten links each. Search engines will choose one copy to display in search results, and rank it as if it’s receiving ten external links. If you had a single copy, it would rank as if it had thirty links. The link equity is spread thin across all the copies of the page, like too little peanut butter on a slice of toast.

Mmm, peanut butter
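
The arithmetic in that example can be written out as a quick sketch. It assumes the simplified model used above, where a page is ranked on its own external link count and only one copy is shown; real rankings depend on many more signals, and the function names are purely illustrative.

```python
def diluted_links(links_per_copy):
    """Links credited to the one copy that search engines choose to show."""
    # Simplification from the example above: the displayed copy only gets
    # credit for its own links, so the best case is the largest single count.
    return max(links_per_copy)


def consolidated_links(links_per_copy):
    """Links credited to a single page once all copies are consolidated."""
    return sum(links_per_copy)


copies = [10, 10, 10]  # three copies with ten external links each
print(diluted_links(copies))       # 10
print(consolidated_links(copies))  # 30
```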

[/av_textblock]

[av_promobox button=’yes’ label=’Sign up here!’ link=’manually,https://www.ranksense.com/blog-list’ link_target=” color=’custom’ custom_bg=’#ffffff’ custom_font=’#1c98e0′ size=’large’ icon_select=’no’ icon=’ue800′ font=’entypo-fontello’ box_color=’custom’ box_custom_font=’#ffffff’ box_custom_bg=’#1c98e0′ box_custom_border=’#1c98e0′]

This is part 4 of a 15-part series to help you improve your ecommerce site’s SEO. Sign up to be notified when a new tip is published!

[/av_promobox]

[av_textblock size=” font_color=” color=”]

The goal of this tactic

Our goal is to improve organic rankings by consolidating page reputation.

Overview

To consolidate page reputation, we’re going to use three methods: adding canonical tags, implementing 301 redirects, and configuring URL parameters.

Canonical tags are short pieces of metadata that tell search engines which version of a page is the “preferred” or main version. Adding a canonical tag to each duplicate tells search engines to pass its reputation to that preferred URL. This way, the main version gets all the reputation – so there’s plenty of peanut butter on the toast. Canonical tags are invisible to the user and don’t interfere with user experience.

On the other hand, 301 redirects actually send users and search engines along to a different URL. These are more appropriate when you don’t need to keep one of the versions of the page. For example, if you are moving your site to HTTPS, you’ll want users who click old HTTP links to be forwarded to the HTTPS equivalent. Using 301 redirects consolidates reputation into your preferred page. However, you shouldn’t use 301 redirects for near-duplicates, such as color variations, because users still need to reach those pages.

URL parameters are appended to a URL after a question mark (?), with multiple parameters separated by ampersands (&). They are used for a number of things, such as tracking customers as they use the site and enhancing user experience. Often parameters are a critical part of what allows the website to function. Unfortunately, they can generate a lot of duplicate content. Proper configuration of these parameters is an easy way to cut down on unnecessary duplicates.
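
To make this concrete, here is a minimal sketch that pulls the parameters out of a URL and drops the ones that only track the visitor. The URL, the function names, and the list of tracking parameter names are assumptions for illustration; your site’s parameter names will differ.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameter names that track visitors without changing the page.
TRACKING_PARAMS = {"sessionid", "utm_source", "utm_medium", "utm_campaign"}


def list_parameters(url):
    """Return the (name, value) pairs found in a URL's query string."""
    return parse_qsl(urlsplit(url).query, keep_blank_values=True)


def strip_tracking(url, tracking=TRACKING_PARAMS):
    """Return the URL with tracking-only parameters removed."""
    parts = urlsplit(url)
    kept = [(name, value)
            for name, value in parse_qsl(parts.query, keep_blank_values=True)
            if name.lower() not in tracking]
    return urlunsplit(parts._replace(query=urlencode(kept)))


url = "https://www.example.com/product.html?color=red&sessionid=abc123"
print(list_parameters(url))  # [('color', 'red'), ('sessionid', 'abc123')]
print(strip_tracking(url))   # https://www.example.com/product.html?color=red
```

Two URLs that become identical after stripping are showing the same page, which is exactly the kind of duplicate this tactic targets.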

Clever usage of canonicals, 301 redirects, and URL parameter configuration is an important part of SEO, especially for ecommerce sites. Without them, all the duplicates are competing against each other in search engines. With them, all the duplicates are working as a team.

How to use this tactic

Step 1 – Assess the problem

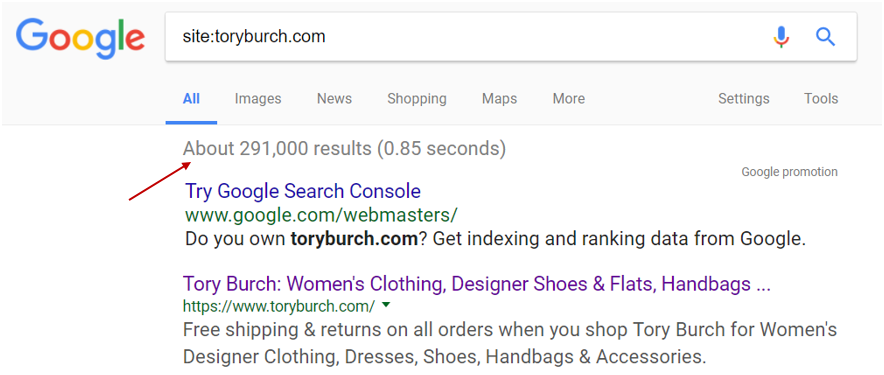

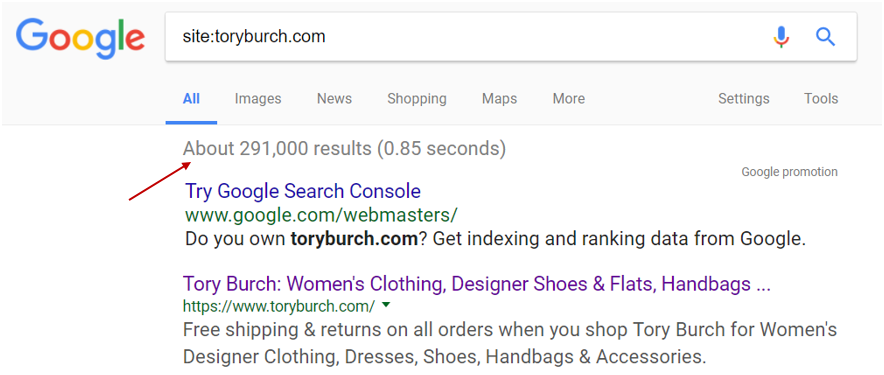

To determine the scope of the problem on your site, go to Google and enter site:yoursitename.com. Doing a site: search asks Google to list every page it has indexed on your site. Above the first result, Google will tell you approximately how many pages it found in your domain.

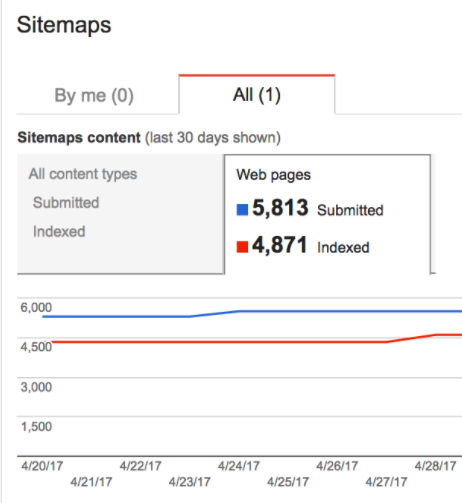

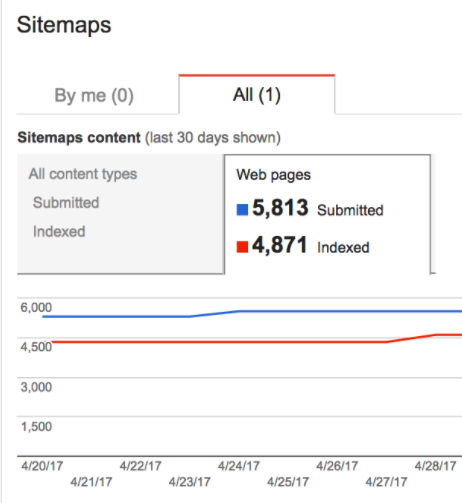

For many ecommerce sites, this number will be 5 or 6 digits, sometimes more. So, how big is too big? As an ecommerce site, most of your pages will be products, so if this number greatly exceeds the number of products you offer, you likely have duplicate content. If your site has a comprehensive sitemap, you can compare the site: search result to the number of pages in the sitemap (go to Google Search Console > Crawl > Sitemaps).
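
If you have the sitemap file on hand, the comparison can be sketched in a few lines. This assumes a standard sitemap in the sitemaps.org format and an indexed-page count that you type in by hand from the site: search; the sample sitemap and the function names are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard sitemap files.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def count_sitemap_urls(sitemap_xml):
    """Count the <url> entries in a sitemap document given as a string."""
    root = ET.fromstring(sitemap_xml)
    return len(root.findall(f"{SITEMAP_NS}url"))


def duplication_ratio(indexed_pages, sitemap_pages):
    """How many indexed pages exist per page listed in the sitemap."""
    return indexed_pages / sitemap_pages


# Tiny made-up sitemap; a real one would be read from your sitemap file.
sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/product.html</loc></url>
  <url><loc>https://www.example.com/other-product.html</loc></url>
</urlset>"""

sitemap_pages = count_sitemap_urls(sample)
indexed_pages = 10  # hypothetical number read off the site: search
print(duplication_ratio(indexed_pages, sitemap_pages))  # 5.0
```

A ratio close to 1 suggests little duplication, while a ratio far above 1 is a sign that many indexed URLs are duplicates.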

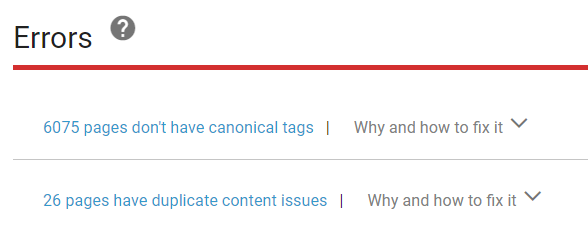

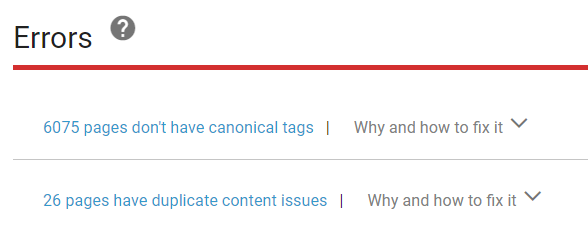

However, you’ll need to actually find which pages have issues. One of the easiest ways to do this is to use our free SEO scan. Your report will contain information about missing canonical tags, duplicate content, and other SEO issues. We’ll scan up to 10,000 pages on your site for free. We’ll detail how to use your report in an upcoming post, but for now, you’ll be looking for the number of pages without canonical tags and the number of pages with duplicate content.

There are paid tools available that can do a similar scan of your site, but not all of them report missing canonical tags, so check before you sign up.

Step 2 – Add canonical tags

Let’s say you have a page with multiple copies:

These are URLs that are still necessary to your site, so you can’t just delete the duplicates. The second URL preserves breadcrumbs, which aid user navigation, and the third changes the color of the product. However, we still want to consolidate the reputation from all three pages. The first one is the simplest URL, so it’s the best choice to be the canonical version. So you would add the following canonical tag to the head section of the HTML on all three pages:

The canonical tag tells search engines that the main version of the page is found at https://www.example.com/product.html. For every group of duplicates, you will choose one version to be the “preferred” version. You’ll then add a canonical tag pointing to the preferred version on every page in the group, including the preferred one.
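
As a sketch of what that looks like in practice, the helper below builds a standard link rel="canonical" element and assigns the same tag to every page in a group. The two duplicate URLs are hypothetical stand-ins for the breadcrumb and color variants, and the function name is ours.

```python
from html import escape


def canonical_tag(preferred_url):
    """Build the <link rel="canonical"> element for a preferred URL."""
    return f'<link rel="canonical" href="{escape(preferred_url, quote=True)}" />'


preferred = "https://www.example.com/product.html"

# Hypothetical duplicates, standing in for the breadcrumb and color variants.
duplicate_group = [
    preferred,
    "https://www.example.com/category/product.html",
    "https://www.example.com/product.html?color=red",
]

# Every page in the group, including the preferred one, gets the same tag.
tags = {url: canonical_tag(preferred) for url in duplicate_group}
print(tags[duplicate_group[1]])
# <link rel="canonical" href="https://www.example.com/product.html" />
```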

You may be wondering why we added the canonical tag to all three URLs, instead of just the second and third. This is called a self-referential canonical tag because the tag refers to the same page that it’s on. Although we’re talking about canonical tags in the context of duplicate content, we actually recommend including canonicals on every single page that should be indexed – even if there’s only one copy of the page.

The reason is that consistency improves your reputation with search engines. When you use a standard format for the canonical URLs on all of your pages (e.g. always HTTPS, always beginning with www), your site appears more trustworthy to search engines. Including self-referential canonical tags on the preferred (or only) version increases the signal of consistency and standardization.

Make sure to always choose the simplest URL to be the canonical version. In general, we recommend using URLs that begin with https://www.yoursitename.com. Keep in mind that Google prefers HTTPS over HTTP. Google also recommends using absolute URLs rather than relative references (where you omit the beginning of the URL) to avoid errors.
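
One way to keep canonical URLs consistent is to run them all through a single helper. The sketch below assumes every URL passed in belongs to your own site and that www.yoursitename.com is your preferred host; both function names are illustrative.

```python
from urllib.parse import urlsplit, urlunsplit


def is_absolute(url):
    """True if the URL includes both a scheme and a host."""
    parts = urlsplit(url)
    return bool(parts.scheme and parts.netloc)


def standardize_canonical(url, host="www.yoursitename.com"):
    """Return the URL rewritten to HTTPS on the preferred host.

    Assumes the URL belongs to your own site. Relative references are
    rejected rather than guessed at.
    """
    if not is_absolute(url):
        raise ValueError(f"relative reference, not an absolute URL: {url!r}")
    parts = urlsplit(url)
    # Keep the path and query, force the scheme and host, drop any fragment.
    return urlunsplit(("https", host, parts.path or "/", parts.query, ""))


print(standardize_canonical("http://yoursitename.com/product.html"))
# https://www.yoursitename.com/product.html
```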

Step 3 – Add 301 redirects

In step 2, we looked at duplicate URLs that are necessary for user experience. However, odds are good that you have duplicate URLs that aren’t necessary to preserve. Rather than duplicate the page, you can use a 301 redirect to the main page. Here are some examples of good candidates for 301 redirects:

Adding 301 redirects will ensure that visitors and search engines are forwarded to the main URL automatically. These will consolidate reputation from the duplicates into the main version. In order to add 301 redirects, you’ll need to make changes to your server’s configuration files.

All three of these examples are cases where you would need to apply 301 redirects to a large batch of URLs (e.g. every HTTP version on your site). Our CEO, Hamlet Batista, recently explained how to implement large numbers of redirects all at once over at Practical Ecommerce. That article contains recipes to add 301 redirects en masse using an Apache feature called RewriteMap.
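
RewriteMap is a directive in Apache’s mod_rewrite module, and its plain-text map format is one key and one value per line, separated by whitespace. As a rough sketch, the helper below turns a list of old paths and their destinations into lines for such a file. The paths and URLs are hypothetical, and the Apache rules that consult the map are not shown here; the article covers those.

```python
def rewrite_map_lines(redirects):
    """Format {old_path: new_url} pairs as lines for a plain-text map file."""
    lines = []
    for old_path, new_url in sorted(redirects.items()):
        # Keys and values are separated by whitespace, so neither may contain it.
        if any(ch.isspace() for ch in old_path + new_url):
            raise ValueError(f"whitespace not allowed: {old_path!r} -> {new_url!r}")
        lines.append(f"{old_path} {new_url}")
    return lines


# Hypothetical duplicate paths and the main URLs they should redirect to.
redirects = {
    "/old-category/product.html": "https://www.example.com/product.html",
    "/index.php": "https://www.example.com/",
}

print("\n".join(rewrite_map_lines(redirects)))
```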

Step 4 – Configure URL parameters

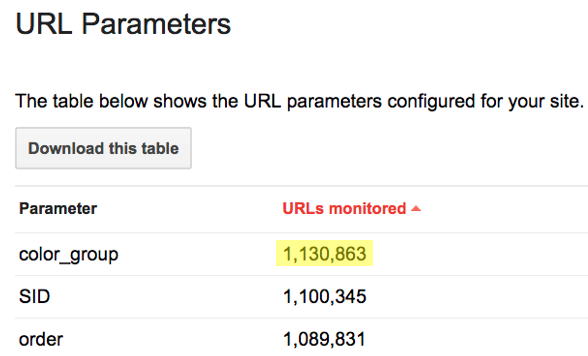

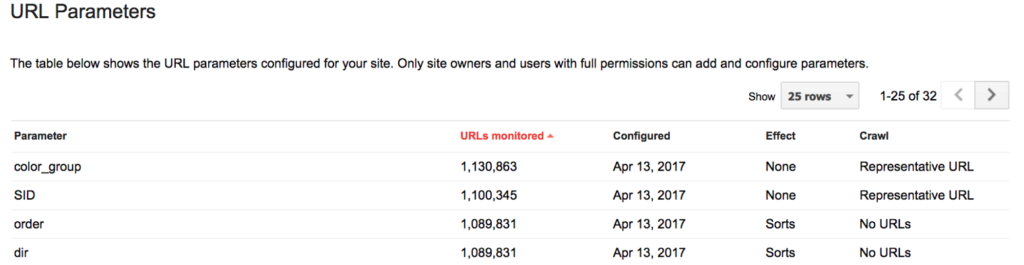

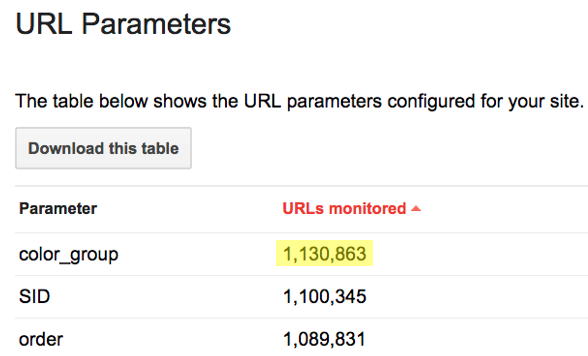

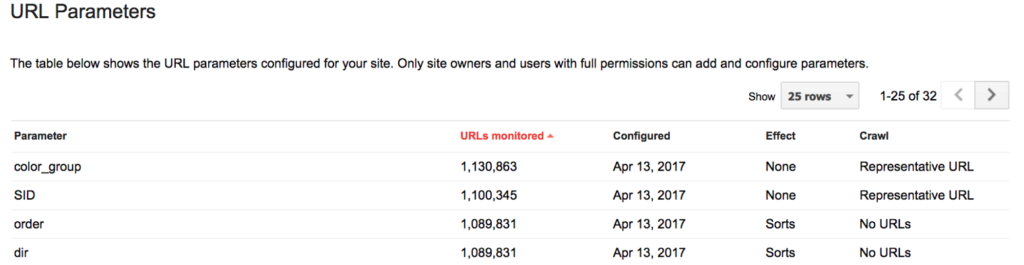

If your site uses URL parameters for navigation or user experience, they may be generating duplicate content. In some cases, they’re the cause of a huge amount of duplicate content.

One of our clients had over a million URLs generated by a color parameter. They had other parameters creating duplicate content as well – the color parameter was just the worst offender. Ecommerce sites often use parameters for:

- Faceted navigation, e.g. filtering a list of products by color, size, etc., typically on a category page

- Tracking, e.g. session IDs

- Product selection, e.g. selecting the color/size of a product on a product page

- Pagination, e.g. navigating between pages of products

This particular client had fewer than 6,000 pages in their sitemap, but Google had a million pages indexed. There were two main consequences of this:

- Google picks up changes to the site very slowly, because Googlebot crawls 24,000 pages per day on average (see Search Console > Crawl > Crawl Stats for this number). At that rate, it takes Googlebot more than 41 days to go through a million URLs. This means that it takes a long time to see any effect from SEO improvements (or to catch a disaster in the making).

- Google doesn’t index all of their “real” pages because there are so many duplicates to weed through. In this particular case, more than a thousand pages (mostly products) were not indexed and thus, would not appear in any search results. To see how many of your actual pages are indexed, go to Google Search Console > Crawl > Sitemaps.
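
The crawl-time figure comes from simple division, sketched below. It assumes a steady crawl rate and that each URL is visited exactly once, which is a simplification of how Googlebot actually schedules its crawling.

```python
def days_to_crawl(total_urls, pages_per_day):
    """Days Googlebot needs to visit every URL once at a steady crawl rate."""
    return total_urls / pages_per_day


indexed_urls = 1_000_000   # pages Google had indexed for this client
sitemap_urls = 6_000       # upper bound; the sitemap had fewer than this
crawl_rate = 24_000        # average pages crawled per day

print(round(days_to_crawl(indexed_urls, crawl_rate), 1))  # 41.7
print(days_to_crawl(sitemap_urls, crawl_rate))            # 0.25
```

Without the duplicates, the same crawl rate would cover the whole sitemap in about a quarter of a day.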

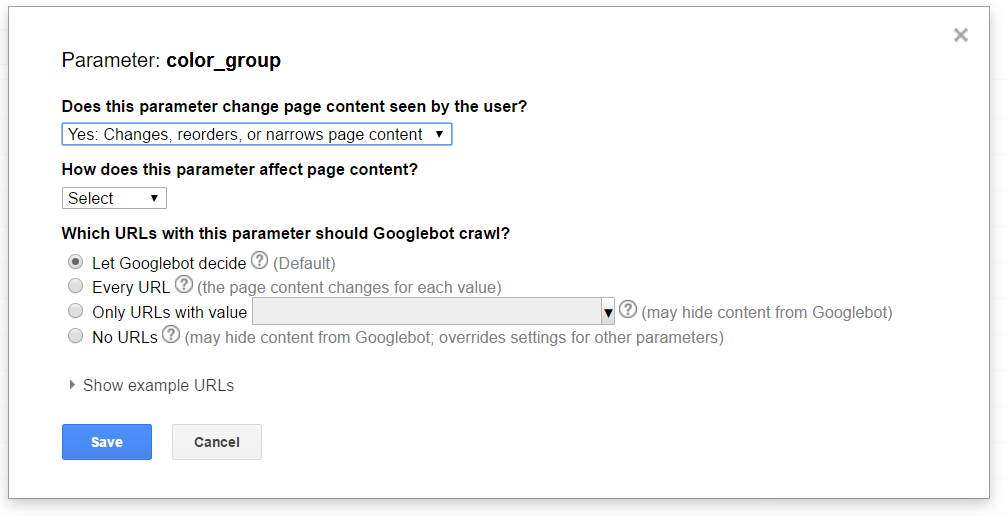

Fortunately, it’s easy to configure your URL parameters to avoid this kind of problem. Go to Google Search Console > Crawl > Parameters.

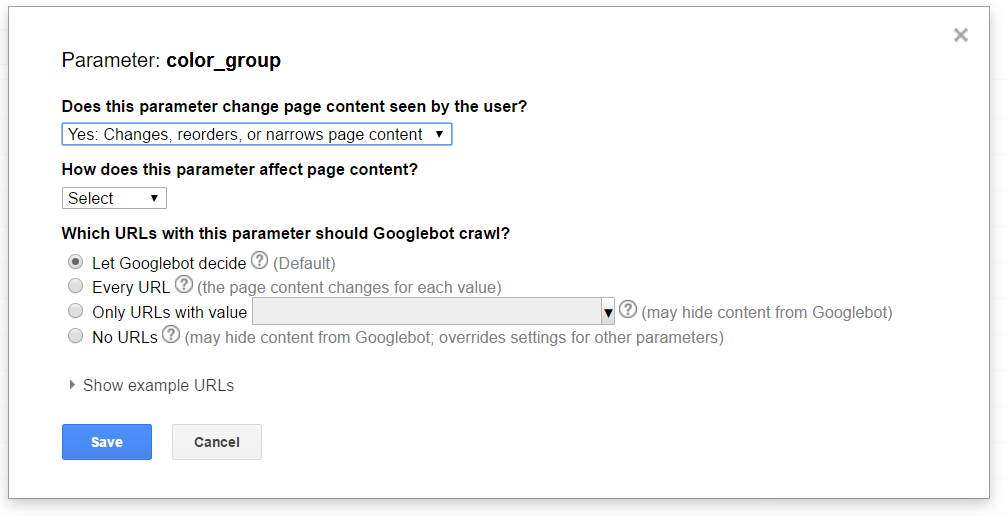

If your parameters are not already listed, click Add Parameter. Enter the name of the parameter exactly as it’s used on your site.
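
If you aren’t sure which parameter names your site uses, you can pull them out of a list of crawled URLs. This is a minimal sketch that assumes you already have such a list (from a crawler export or your server logs); the URLs and the function name are hypothetical.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlsplit


def urls_per_parameter(urls):
    """Count how many distinct URLs use each query parameter name."""
    seen = defaultdict(set)
    for url in urls:
        for name, _value in parse_qsl(urlsplit(url).query, keep_blank_values=True):
            seen[name].add(url)
    counts = {name: len(found) for name, found in seen.items()}
    # Largest first, so the worst offenders are at the top.
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical crawl export; parameter names are kept exactly as found.
crawled = [
    "https://www.example.com/shirts?color=red",
    "https://www.example.com/shirts?color=blue",
    "https://www.example.com/shirts?color=blue&page=2",
    "https://www.example.com/shirts?sessionid=abc123",
]
print(urls_per_parameter(crawled))
# [('color', 3), ('page', 1), ('sessionid', 1)]
```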

You’ll be prompted to answer the question “Does this parameter change page content seen by the user?” If not (common for tracking parameters), select “No.” If it does affect the page content, select “Yes.”

You’ll then need to tell Google how the parameter affects the page content. Your choices are: sorting, narrowing, specifying, translating, and paginating (see Search Console Help’s explanation of active parameters for details on these).

For the last question, “Which URLs with this parameter should Googlebot crawl?” use your best judgment. Unfortunately, letting Googlebot decide can result in massive duplicate content problems, so we only recommend that choice if only a very small number of pages are generated by that parameter. Here are some guidelines:

- Pagination parameters – crawl every URL. You’ll want Google to pick up pagination tags and canonical tags to consolidate pagination sets.

- Sorting and narrowing parameters, including faceted navigation parameters – no URLs. The content should be available without a sort parameter attached, so you don’t need to allow it to crawl these. If there are categories only accessible via faceted search parameters, you need to whitelist them before blocking or hiding the facets.

- Product selection parameters – only URLs with one value, if the parameter will be necessary for Googlebot to see the product. This will depend on your platform.
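
If you have many parameters to configure, it can help to write the guidelines above down as a lookup table before you start clicking through Search Console. The role labels and the wording of the settings below are our own paraphrase of those guidelines, not values taken from Search Console itself.

```python
# Parameter roles from the guidelines above, mapped to the suggested answer
# for "Which URLs with this parameter should Googlebot crawl?".
# The keys and values are labels chosen for this sketch.
CRAWL_GUIDELINES = {
    "pagination": "every URL",
    "sorting": "no URLs",
    "narrowing": "no URLs",
    "product_selection": "only URLs with one value",
}


def suggested_crawl_setting(role):
    """Look up the suggested crawl setting for a parameter role."""
    try:
        return CRAWL_GUIDELINES[role]
    except KeyError:
        raise ValueError(f"no guideline for parameter role {role!r}") from None


print(suggested_crawl_setting("pagination"))  # every URL
```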

Step 5 – Monitor performance

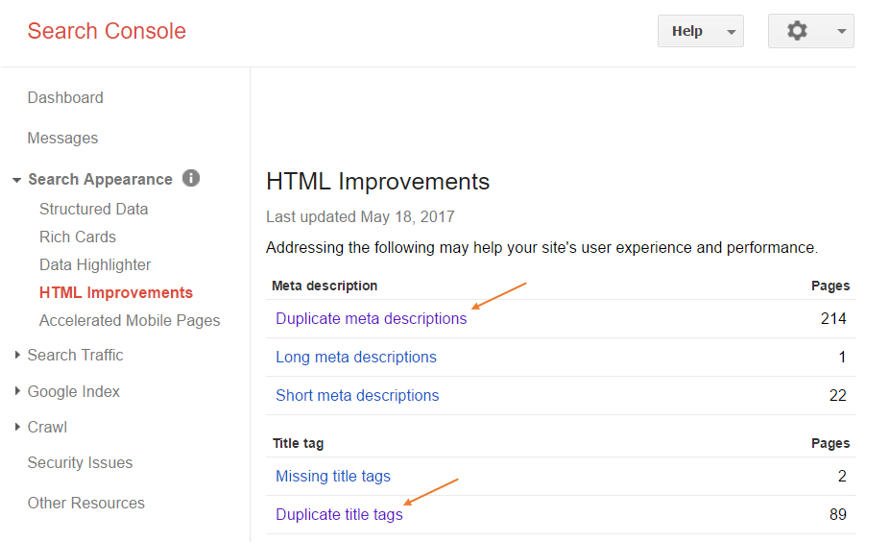

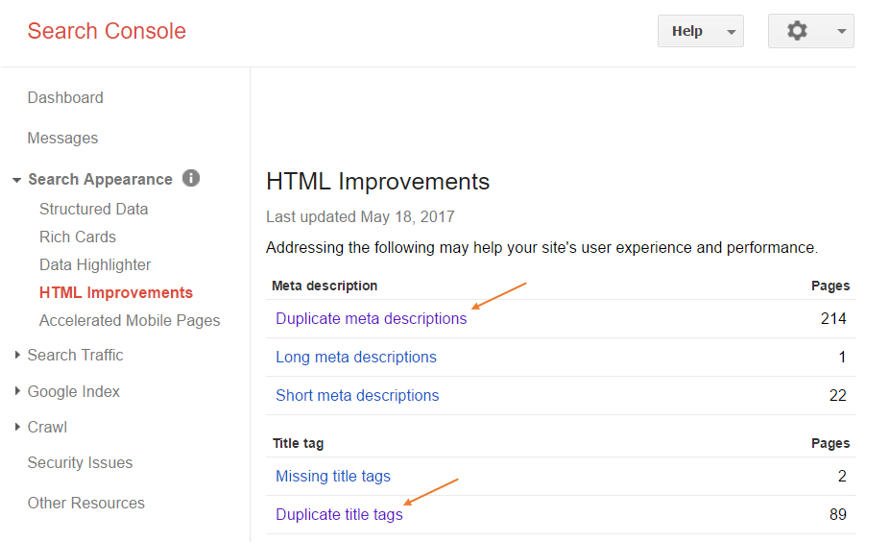

As with any SEO technique, you’ll want to monitor the performance of your site before and after you apply changes. Before applying the changes, check Search Console to see the extent of your duplicate content problem. Go to Search Console > Search Appearance > HTML Improvements. Check how many duplicate meta descriptions and title tags you have.

These numbers don’t necessarily reflect only duplicate pages, because the same meta description or title may have been used for multiple products, but this is an easy way to see your duplicate content decrease. You’ll also want to compare the traffic before and after the changes to see the benefit of consolidating your page reputation. Look at the traffic for a period before the change and after the change, allowing a month for Google to pick up the changes.
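
If you’d like to track duplicate titles or meta descriptions yourself between Search Console updates, a rough local check is possible. The sketch below assumes you have crawl results as one record per page with url, title, and description fields; that structure, the sample data, and the function name are all hypothetical.

```python
from collections import defaultdict


def duplicate_groups(pages, field):
    """Group page URLs that share the same non-empty value for a field."""
    by_value = defaultdict(list)
    for page in pages:
        value = page.get(field, "").strip()
        if value:
            by_value[value].append(page["url"])
    # Keep only the values that appear on more than one URL.
    return {value: urls for value, urls in by_value.items() if len(urls) > 1}


# Hypothetical crawl results: one dict per page.
pages = [
    {"url": "https://www.example.com/product.html", "title": "Blue Widget"},
    {"url": "https://www.example.com/category/product.html", "title": "Blue Widget"},
    {"url": "https://www.example.com/other.html", "title": "Red Widget"},
]

dupes = duplicate_groups(pages, "title")
print(len(dupes))  # 1
```

Running the same check before and after your changes gives you a number to compare alongside the Search Console report.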

That’s it for this tactic! I like to focus on practical, free tips that anyone can use in this series. But the fact is that some duplicate content problems come from the ecommerce platform and are very difficult to work around. So I thought I’d briefly mention how our software corrects problems that are otherwise impossible to fix on certain platforms.

Without getting too technical, our software is able to make edits at the HTML level to your entire site. This means we can solve duplicate content problems in a variety of ways. Have a platform that creates duplicate content that’s impossible to get rid of? We can handle it. All of this is automated and happens in real time. It’s an extremely powerful SEO platform that does a lot of other things too, but for some clients, overcoming the limitations of their platform is the biggest attraction. If you’re interested in our software, feel free to request a trial.

Be sure to check out the previous tips in this series:

[/av_textblock]

[av_image src=’https://www.ranksense.com/wp-content/uploads/2017/05/request-a-free-SEO-scan.png’ attachment=’5262′ attachment_size=’full’ align=’center’ styling=” hover=” link=’manually,https://www.ranksense.com/workshop-list’ target=” caption=” font_size=” appearance=” overlay_opacity=’0.4′ overlay_color=’#000000′ overlay_text_color=’#ffffff’ animation=’no-animation’][/av_image]

[av_promobox button=’yes’ label=’Scan now!’ link=’manually,https://www.ranksense.com/free-scan/’ link_target=” color=’custom’ custom_bg=’#ffffff’ custom_font=’#1c98e0′ size=’large’ icon_select=’no’ icon=’ue800′ font=’entypo-fontello’ box_color=’custom’ box_custom_font=’#ffffff’ box_custom_bg=’#1c98e0′ box_custom_border=’#1c98e0′]

What SEO problems are hidden in your site? Our free SEO scan checks for 52 common issues. You’ll receive a full report explaining the problems we find and how to fix them. We include an unclaimed revenue calculator to estimate how much your SEO issues are costing you. Get your free report today!

[/av_promobox]